Isaac Liao

I am a first-year PhD student in the Machine Learning Department at Carnegie Mellon University, advised by Albert Gu. I recently completed my Master's degree under Max Tegmark in the Tegmark AI Safety Group at MIT, researching mechanistic interpretability. I double majored in Computer Science and Physics at MIT, and did research on meta-learned optimization in the lab of Marin Soljačić during my undergraduate years.

Within machine learning, my interests include minimum description length, variational inference, hypernetworks, meta-learning, optimization, and sparsity.

In my leisure time, I enjoy skating, game AI programming, and music. I won the Battlecode AI Programming Competition in 2022. I was a silver medalist in the International Physics Olympiad (IPhO) in 2019 and received an honorable mention at IPhO 2018.

Email / Resume / Google Scholar / ORCID

Machine Learning Research

Isaac Liao, Albert Gu
arXiv, 2025; research blog, 2025
We get 20% on ARC-AGI with no pretraining, no datasets, no search, and only gradient descent at inference time. The key ingredient is a loss function designed for information compression.

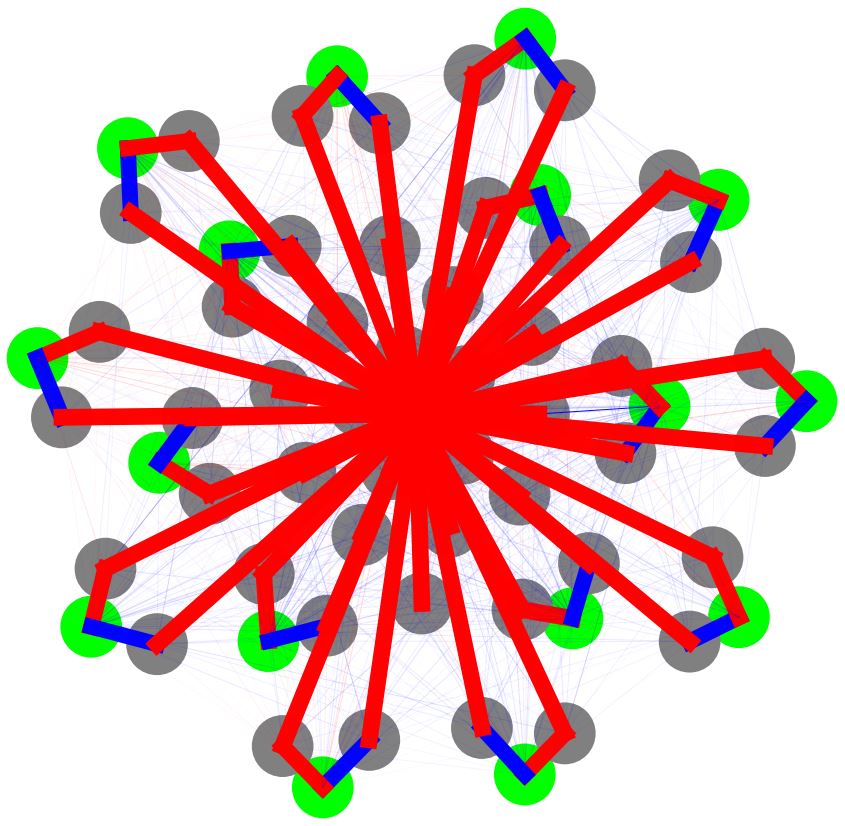

Joshua Engels, Eric J. Michaud, Isaac Liao, Wes Gurnee, Max Tegmark
arXiv, 2024
When large language models do modular addition, the numbers are stored in a circle.

Isaac Liao, Ziming Liu, Max Tegmark
arXiv, 2023
When we generatively model a neural network's weights, we tend to generate weights that are smartly arranged.

Isaac Liao, Rumen R. Dangovski, Jakob N. Foerster, Marin Soljačić
TMLR, 2023
We can feed gradients as input into a linear neural network, get a step as output, and train the network to perform optimization while that optimization is running.

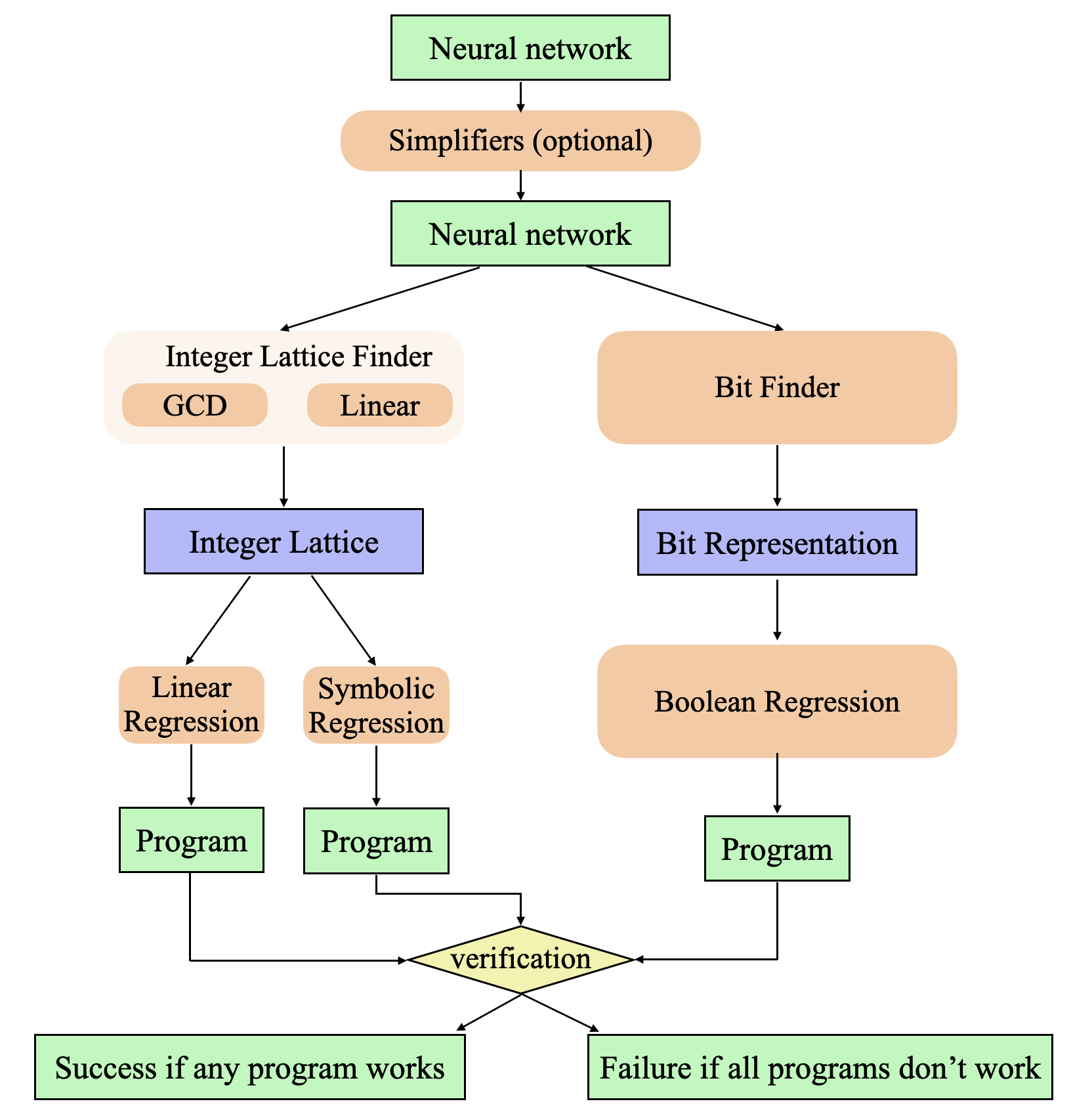

Eric J. Michaud, Isaac Liao, Vedang Lad, Ziming Liu, Anish Mudide, Chloe Loughridge, Zifan Carl Guo, Tara Rezaei Kheirkhah, Mateja Vukelić, Max Tegmark
arXiv, 2024
We auto-convert RNNs into equivalent, interpretable Python code for model verification.

Research-like Class Projects

Isaac Liao, Saumya Goyal
10-708 Probabilistic Graphical Models
We use the theory of information percolation to reason about the temperature at which we should find a magnetic phase transition in a spin glass.

Isaac Liao
6.7830 Bayesian Modeling and Inference
We improve targeted movie recommendation systems by 2% by using Bayesian methods to add uncertainty to SVD-based large matrix completion algorithms.

Isaac Liao
6.7910 Statistical Learning Theory
This sparse neural network can imitate almost all other neural network architectures that have about the same number of parameters.

Isaac Liao
6.819 Advances in Computer Vision
Reinvented most of the framework that powers VAEs and BNNs, through the lens of image compression.

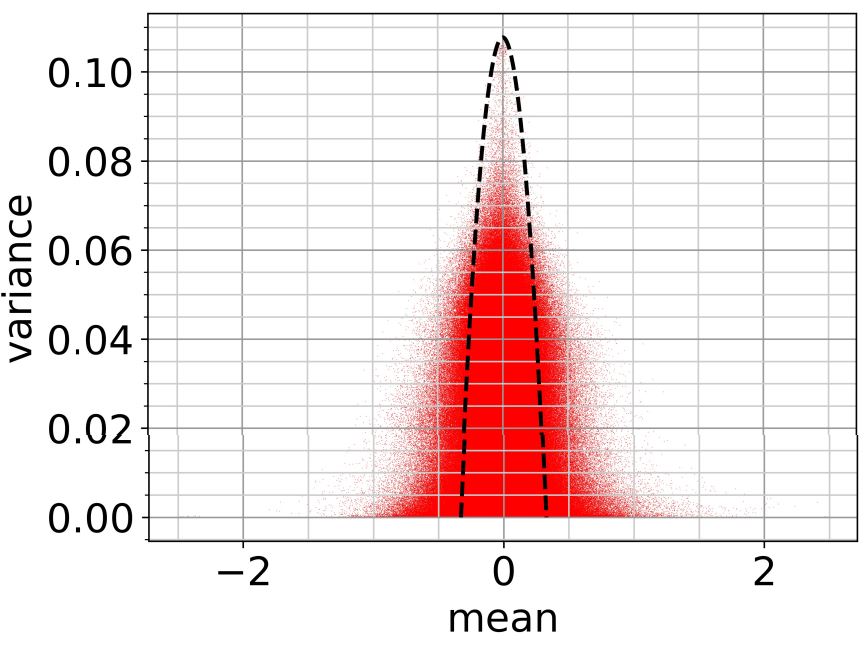

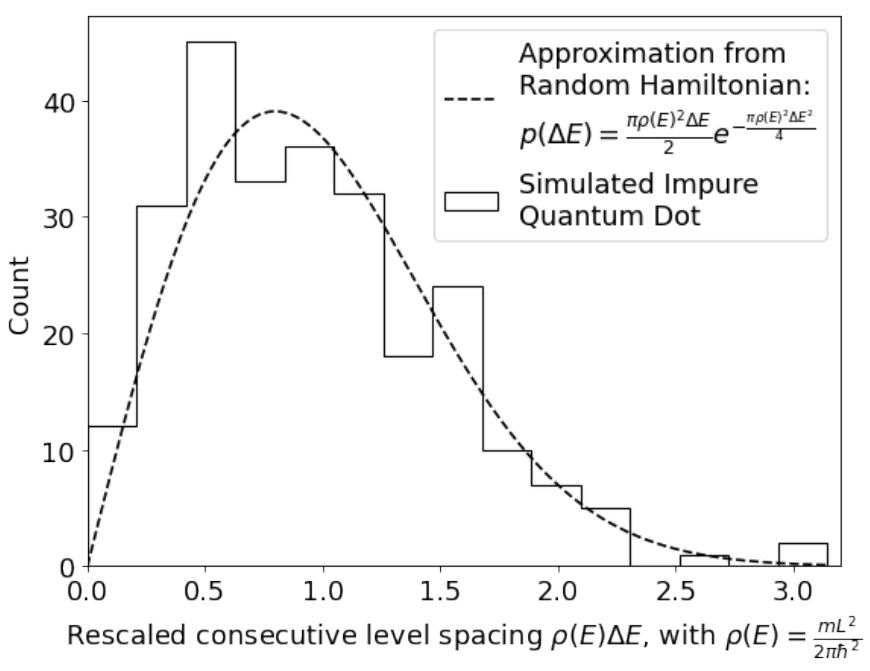

Isaac Liao
8.06 Quantum Physics III
We rederive the spectrum of random (Gaussian) Hermitian matrices.

Education Research

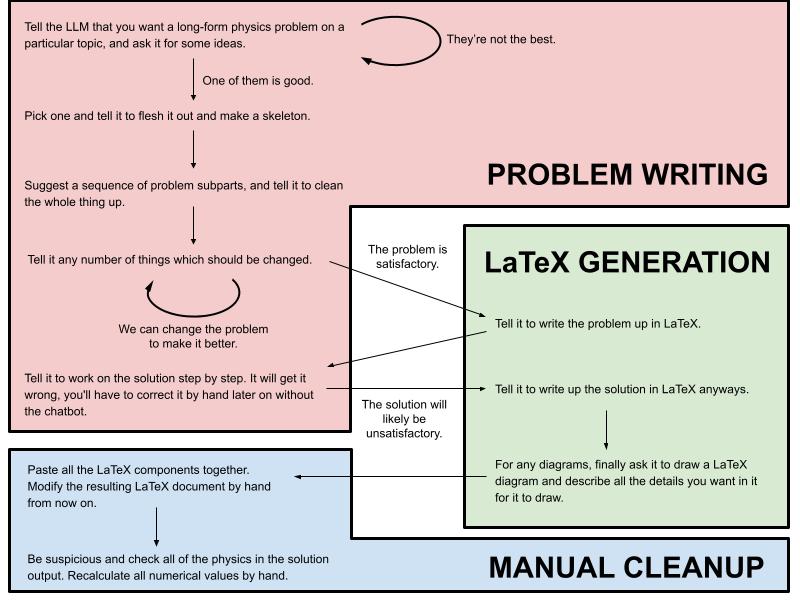

We use large language models to generate physics problems to help teach ~700 students. Resulted in publishing " |

Miscellaneous

I placed seventh worldwide in the Battlecode 2021 Game AI programming competition, and this is my strategy report. |


This website was produced from a template made by Jon Barron. |